January 2007 - Yesterday I found a very interesting application on Google: Image Labeler (http://images.google.com/imagelabeler).

The goal is for Google to use their fan base to add descriptive keywords to images that Google finds on the web. These keywords will be used by the search engine to improve image search results.

Google makes it work like a game. When you click "go", Google pairs you up with another random person who's playing the game too. Google displays the same image to both players. Each player types in keywords that describe the picture. If both players type in the same keyword, then you "win". Another image is displayed and you go at it again. Each round lasts 90 seconds. If you want to play another round then Google pairs you up with a different online player.

To make it more fun, Google gives each player 100 points for agreeing on keywords and reports top players of the day, etc.

Google offers a "pass" button in case you really don't know how to describe a particular picture. After a round is over they also let you see what keywords your partner used.

What about the Jokers out there?

A typical problem with user-submitted content is that a few bad apples will screw around with the system. Imagine if I wanted to prove my hacker credentials by putting some strange word, like "dolophopsoism", on every image. Then I brag to my friends, "Do an image search on dolophopsoism and see how many images I've screwed with! Ha ha ha!!" When I was 15 years old I probably would have done this too. Sigh.

Google's approach of pairing unknown players together means that they will only accept keywords that two independent users have associated with a particular image. Good idea, but even that only works so well.
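
The agreement rule described above can be sketched in a few lines. This is only an illustration of the idea, not Google's actual code; the function name `agreed_keywords` and the case-insensitive comparison are my own assumptions.

```python
def agreed_keywords(guesses_a, guesses_b):
    """Return the keywords that both players typed for the same image."""
    def normalize(words):
        # Assumption: compare case-insensitively and ignore stray whitespace.
        return {w.strip().lower() for w in words if w.strip()}

    return normalize(guesses_a) & normalize(guesses_b)


# Only the overlap between the two independent guess lists would be accepted.
print(agreed_keywords(["Beach", "sand"], ["beach", "ocean"]))
print(agreed_keywords(["theater"], ["diphosphonate"]))
```

A keyword typed by only one player never gets through, which is exactly why a lone joker can't pollute an image by himself.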

I played this "game" about 20 times yesterday. A couple of times my partner and I just couldn't agree on keywords for what I thought was an easy photo. After the round was over I'd look at their guesses to see how we could have missed it.

One time the first image was of an opera house interior, seen from the stage. I thought there were plenty of good keywords: theater, chairs, balcony, orchestra, opera, inside, blue, pink (two colors that were abundant in the picture). We were stalled and the timer ran out. Reviewing our round I found that, surprisingly, my partner had guessed "entrepreneurialism" and "diphosphonate". What? At that point I felt I must have been paired up with some bot that had hit the Google Image Labeler by mistake.

I played some more.

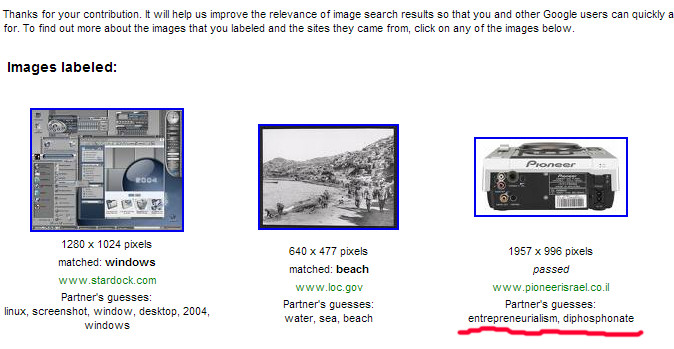

Then another partner and I could not agree on the first photo. This time the photo had the word "pioneer" in it. Surely we would each type that word. I clicked "pass", we went on to two more photos, and the round was over. The result is shown below. Note that the first picture we looked at is on the right.


There are those two keywords again! Entrepreneurialism and diphosphonate. How can that be? Notice that after we passed on this image we went on to agree on "beach". The third and last image of this round is a computer screen shot. My partner knew enough about that image to guess "linux", "desktop", and "2004". Hmmmmm.

Now I also remembered wondering how some people could get such tremendously high scores. Here's a shot of the ranking screen.


If each correct match scores 100 points, then Podunk Oklahoma has managed to agree with random partners on how to label 39,167 images. Wow. How?

Ah, it is all clear to me now.

Tit For Tat

What if instead of a million concurrent users of Google Image Labeler, there are only a thousand or so online at any one time? Let's assume a friend and I want to screw with the system. How would we do it? You might remember the prisoner's dilemma and the winning strategy? Tit For Tat. Two cooperating players can score a load of points. But how would you cooperate through Google Image Labeler? Use a weird keyword as a rendezvous point.

Here's how I think it works. Describe the first image with "entrepreneurialism". If your random partner also uses "entrepreneurialism" then you know you've hooked up. Just continue to guess "entrepreneurialism" on each image presented and you will keep getting points. In fact, keep "entrepreneurialism" in your clipboard and just paste it in as fast as possible. You could easily label 50 images in a round for a score of 5,000 points.
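
To put numbers on that, here is a rough back-of-the-envelope sketch. The 100 points per match, the 90-second round and the 39,167 matches come from this post; the 50 images per round is just my guess from above, and `round_score` is a made-up helper that counts one match per image.

```python
import math

POINTS_PER_MATCH = 100   # from the post: 100 points per agreed keyword
ROUND_SECONDS = 90       # from the post: each round lasts 90 seconds


def round_score(my_words, partner_words):
    """Score a round where image i counts if both sides typed the same word."""
    return sum(
        POINTS_PER_MATCH
        for mine, theirs in zip(my_words, partner_words)
        if mine == theirs
    )


rendezvous = "entrepreneurialism"
# Assumption: two cooperating players get through 50 images in one round.
per_round = round_score([rendezvous] * 50, [rendezvous] * 50)
print(per_round)  # 5000

top_score = 39_167 * POINTS_PER_MATCH            # the 39,167 matches cited earlier
rounds_needed = math.ceil(top_score / per_round)
hours = rounds_needed * ROUND_SECONDS / 3600
print(top_score, rounds_needed, hours)
```

Under these assumptions it takes about 784 rounds, or a bit under 20 hours of nonstop play, to reach that score.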

Now, instead of doing it by hand, do it with a bot. Wow, it would be easy to get almost 4M points as your bots play cooperatively with each other.

But my sample above was clearly not a bot - some human had to guess at those other two images.

I'd like to imagine some kindred soul who noticed the same anomaly. He/she decided to trap a bot by using these same two keywords. When I passed at the first image, they knew I wasn't a bot and then played fairly on the next two images. You might think I'm stretching it here, but notice the guesses on the third image. My partner in this round had to know enough about computers to know that the image was a screen shot of a 2004 Linux desktop. I didn't know that and was guessing "Vista". We eventually agreed on a more generic "windows". My partner in that round was certainly computer savvy.

How to Prevent This Hacking?

Google could stop this little game in a couple of ways. First, by looking for people who guess the same word on all images. A bot maker could avoid this trap by using a prearranged sequence of keywords.
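
A minimal sketch of that first check, assuming Google keeps each player's guesses per image for a round. The function name `repeats_one_word` and the thresholds are my own invention, not anything Google has published.

```python
from collections import Counter


def repeats_one_word(guesses_per_image, min_images=3, threshold=1.0):
    """Flag a round where one word shows up on (nearly) every image."""
    if len(guesses_per_image) < min_images:
        return False  # too few images to say anything
    counts = Counter()
    for guesses in guesses_per_image:
        # Count each word at most once per image.
        counts.update({g.strip().lower() for g in guesses})
    if not counts:
        return False
    _, top = counts.most_common(1)[0]
    return top / len(guesses_per_image) >= threshold


bot_round = [["entrepreneurialism"]] * 5
human_round = [["beach", "sand"], ["linux", "desktop", "2004"], ["car"]]
print(repeats_one_word(bot_round))
print(repeats_one_word(human_round))
```

Lowering `threshold` below 1.0 would also catch a bot that throws in an occasional different word, at the cost of more false alarms.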

Second, Google could look for players who repeatedly use the same keyword on the first image - clearly a rendezvous strategy. But a smart bot maker could also use a different starting point in the keyword list each time. Perhaps based on synchronized date/time.
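
The first-image check could look something like this. It is a sketch under the assumption that the first guess of every round is logged per player; `suspicious_openers`, `min_rounds` and `max_share` are hypothetical names and values.

```python
from collections import Counter


def suspicious_openers(first_guesses_by_round, min_rounds=5, max_share=0.5):
    """Return opening words a player used in more than max_share of rounds."""
    n = len(first_guesses_by_round)
    if n < min_rounds:
        return set()  # not enough history yet
    counts = Counter(w.strip().lower() for w in first_guesses_by_round)
    return {word for word, c in counts.items() if c / n > max_share}


bot_history = ["entrepreneurialism"] * 4 + ["beach"]
human_history = ["beach", "car", "dog", "tree", "house"]
print(suspicious_openers(bot_history))
print(suspicious_openers(human_history))
```

As noted above, a bot that rotates its starting word would slip under a per-word share like this one.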

Google could look for the same words being used over and over, but a smart-ass botter could pull words from the pages of some Project Gutenberg text. Maybe use the Bible for seed words.

Another strategy is to realize that real humans can't score 4M points, ever. Google could monitor the rate of play and just disqualify players who play too fast. However, if you disqualify players, then they know the jig is up, and they'll try to work around whatever strategy Google uses to detect them.
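
Monitoring the rate of play might be as simple as the sketch below. The 90-second round is from the game; the 3-second minimum gap between matches is a number I made up, and `too_fast` is a hypothetical helper.

```python
ROUND_SECONDS = 90  # from the post: each round lasts 90 seconds


def too_fast(match_timestamps, min_seconds_per_match=3.0):
    """Flag a round whose average gap between matches is implausibly short."""
    if len(match_timestamps) < 2:
        return False
    ts = sorted(match_timestamps)
    avg_gap = (ts[-1] - ts[0]) / (len(ts) - 1)
    return avg_gap < min_seconds_per_match


# 50 matches spread over one round versus a match every 10 seconds.
bot_times = [i * ROUND_SECONDS / 50 for i in range(50)]
human_times = [i * 10 for i in range(9)]
print(too_fast(bot_times))
print(too_fast(human_times))
```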

Here's a better idea: screw with the asses who run these bots. Google could detect them using one of the above ideas, but don't disqualify them. Instead just always pair them up together. Let them run as fast as they want, doing all the fake image labeling they want. Just discard their results and don't add their scores to the Ranking lists.
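
Here is a rough sketch of that pairing idea. It assumes there is already a set of flagged players from one of the checks above; `pair_players` and `should_record` are names I invented for illustration.

```python
import random


def pair_players(waiting, flagged, rng=random):
    """Pair flagged players only with each other; everyone else pairs normally."""
    bots = [p for p in waiting if p in flagged]
    humans = [p for p in waiting if p not in flagged]
    pairs = []
    for pool in (bots, humans):
        rng.shuffle(pool)
        # An odd player out simply waits for the next pairing pass.
        pairs.extend((pool[i], pool[i + 1]) for i in range(0, len(pool) - 1, 2))
    return pairs


def should_record(pair, flagged):
    """Labels and scores count only when neither player is flagged."""
    return not (pair[0] in flagged or pair[1] in flagged)


flagged = {"bot1", "bot2"}
for pair in pair_players(["ann", "bob", "cy", "dee", "bot1", "bot2"], flagged):
    print(pair, "recorded" if should_record(pair, flagged) else "discarded")
```

The bots keep getting matches and points on their own screens, so nothing tells them the jig is up.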

Or, even better, quarantine the IP addresses they come from and display a "special" All-time Ranking list to those IP addresses. Populate the list with some arbitrary names so that the bots never appear on the list. Keep bumping up the highest score so it looks like these bot-making jerks are not as good as the imaginary other jerks that they see at the top of the list. Hah!
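
The "special" ranking list could be faked along these lines. Everything here is hypothetical: the function `leaderboard_for`, the made-up player names, the 1,000-point steps and the example addresses.

```python
def leaderboard_for(viewer_ip, real_board, quarantined_ips, viewer_score=0, size=5):
    """Return the real board, or a decoy whose scores always beat the viewer's."""
    if viewer_ip not in quarantined_ips:
        return real_board[:size]
    # Decoy: arbitrary names, each a bit further ahead of the quarantined viewer.
    return [
        (f"player{i + 1}", viewer_score + 1000 * (size - i))
        for i in range(size)
    ]


real_board = [("ann", 900), ("bob", 800)]
quarantined = {"192.0.2.9"}
print(leaderboard_for("192.0.2.1", real_board, quarantined))
print(leaderboard_for("192.0.2.9", real_board, quarantined, viewer_score=5000))
```

Because the decoy scores are computed from the viewer's own score, the top of the list stays out of reach no matter how fast the bot plays.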

That should keep them busy and maybe the rest of us can go back to improving the real searchability of images.

Me? I'm now going to go back and see if I can catch a bot myself.

How fast can you type diphosphonate?

UPDATE: Playing With the Bots?

I just played the game a few more times. Each time I'd enter entrepreneurialism but never got a hit. Then one time I got a hit! Here it is. Remember that the first image is on the right. (I often get the broken image icon with Google Image Labeler - don't exactly know why.)


I kept entering entrepreneurialism, but didn't get any more hits. So it looks like this bot doesn't just use entrepreneurialism on every guess. The pattern for this one would be:

- entrepreneurialism

- carcinoma

- accretion

- stars

- galaxy

- space

- carcinoma

- bequeathing

- forbearance

Now I'm in the habit of reviewing my partner's guesses after each game. I'm not sure about the series below. I thought I was playing with a human because of so many correct matches. However, I see that word: forbearance. How the hell could that apply to the image of the dashboard? Clearly there is more going on here.


In the shot below you can see that the image is a model of some kind of monster. Google Image Labeler does not let you use words that others have already "agreed" describe the image. Those words are "off-limits." In this screen shot it looks like the bots have already played with this image twice and "agreed" that accretion and bequeathing accurately describe it.


I have also seen the word "abrasives" used incorrectly several times. So I decided to start off with that one a few times. A-hah! I caught another bot:


This time we agreed immediately on the first keyword, but I could not get it to bite on any others.


It looks like I'll need to have a whole list of keywords ready to go if I'm going to play with the bots.

Who Are They?

I'm pretty good at this stuff and I only get scores of 900 when it really goes well. In the meantime, some of the top All-time scorers can routinely get 1300 or 1400 even when they play a "guest." Sure they do.


To Catch A Bot

My new strategy is now to play the game normally. However, if we can't agree on the first image, then I quickly pass and try the second image. This allows me to review my partner's guess at the first image and I can see what other weird words come up.

Does it Affect Google Image Search?

So far, I don't think so. I did image searches at Google using some of these words and I didn't see this pollution in the results.